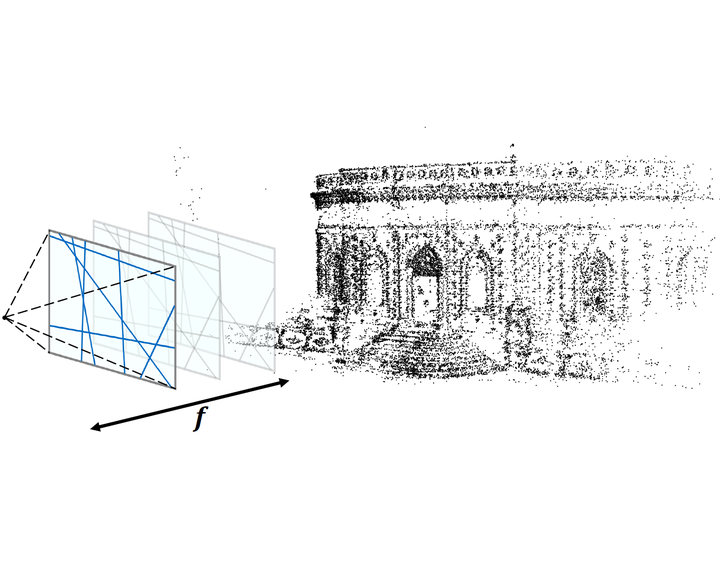

Recent works on localization and mapping from privacy preserving line features have made significant progress towards addressing the privacy concerns arising from cloud-based solutions in mixed reality and robotics. The requirement for calibrated cameras is a fundamental limitation for these approaches, which prevents their application in many crowd-sourced mapping scenarios. In this paper, we propose a solution to the uncalibrated privacy preserving localization and mapping problem. Our approach simultaneously recovers the intrinsic and extrinsic calibration of a camera from line-features only. This enables uncalibrated devices to both localize themselves within an existing map as well as contribute to the map, while preserving the privacy of the image contents. Furthermore, we also derive a solution to bootstrapping maps from scratch using only uncalibrated devices. Our approach provides comparable performance to the calibrated scenario and the privacy compromising alternatives based on traditional point features.